Part 27 - How do computer graphics work?

Written by Eric Muss-Barnes, 26 May 2020

What are computer graphics? Well, technically, computer graphics are anything which is displayed on a computer screen. But, typically, when people use the phrase "computer graphics" they are simply referring to images and pictures. Understanding computer graphics is not essential to learning how to program a website, but, like anything else in life, the more knowledge you have, the better equipped you will be to grasp the nuances of what you are creating.

Before we talk about graphics and images on computer screens, let's review how we got here in the first place!

Thousands of years ago, human beings started to create pictures with stone carvings. Then our technology advanced to paintings, followed by the printing press, and finally to photography, the most advanced form of analog image creation ever invented.

In the 1920s, we invented the very first electronic images: television. Many people mistakenly think television was invented in the 1950s. While it is true that a majority of American homes began to own a television set in the 1950s, the technology was actually invented 30 years earlier. Although the technology has advanced dramatically in the last century, the foundational elements of television remain the same today as they were a century ago. Computers also display electronically generated pictures on some type of monitor or screen. A computer screen could be used on a desktop, a laptop, a cellphone, a tablet, or even a watch. Regardless of the format of the screen, the function is always the same: we are converting visual images into electronic files, which are displayed on screens as pinpoints of light.

I want you to remember that phrase - "pinpoints of light". At some point in your life, I'm sure you played with a toy like a LiteBrite, or just looked really closely at a television or computer screen, and actually saw all the individual little lights which create the image. As we move away from those pinpoints, our mind blends those dots together to form a single image, much like a pointillist painting. Anyone with a decent art education, or a love for Ferris Bueller, is familiar with the famous pointillist painting "A Sunday Afternoon on the Island of La Grande Jatte" by Georges Seurat. Pointillist paintings use tiny dots of paint to create the image. The smaller those dots of paint are on the canvas, the more detailed the image appears as we move away from it.

Computer screens function in a very similar fashion. Instead of tiny dots of paint, they use tiny dots of light. As we move away from those dots, they blend together and make an image.

There are 2 words you need to know, which measure the quality and the sharpness of that image: pixels and resolution.

"Pixels" are what we call the little dots, the pinpoints of light.

"Resolution" is a measurement of the quantity of those pixels. Obviously, the more pixels we have, the higher the resolution. The higher the resolution, the more crisp and sharp and clear the image appears. Resolution is typically expressed in a term called Dots Per Inch (DPI) or Pixels Per Inch (PPI).

You follow so far? Pretty simple, right? The physical screen has pixels and resolution. The computer file which generates that image also has pixels and resolution.

A great analog analogy is to imagine your computer monitor is a window screen, and the image file saved in the computer is a pointillist painting, on the other side of the screen.

All the squares in the screen are "pixels" and all the dots of paint are also "pixels". The screen and the painting are two separate things, but they both have pixels. That's why I like using the screen/painting analogy because it becomes far easier to understand. The dots of paint and the squares on the screen are not related in any way, but the density of that screen, combined with the density of the dots of paint, could make for a vastly different looking image.

My analogy is not 100% accurate though, because the pixels on a monitor are actually a virtual thing. When you physically move your eye up close to a monitor, the physical dots you see are tiny diodes which emit light. On average, it takes 3 of those physical diodes to create one virtual pixel. Confusing? Let's go back to the window metaphor. Imagine you have a window that is 3 feet across and 2 feet high. Now, imagine you swap out the screen for screens with different densities. You are changing the resolution of the screen, but the window always remains 3 feet by 2 feet.

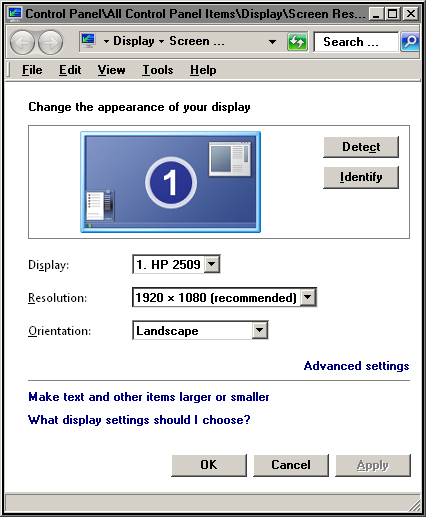

Computer monitors are the same. We can alter the virtual resolution of the display, even though the physical dimensions of the screen never change.

If we look at the screen resolution of a monitor, we can see how it can be changed. As we go down to smaller resolutions, objects on the screen will obviously look larger, because we are using fewer pixels to generate the image in the same physical space. However, you will also notice there are "recommended" sizes. This is because the monitor is designed to display an optimal resolution, based upon the capabilities of the electronics.

Color computer monitors in the 1990s typically had a resolution of about 800x600 pixels. Those numbers are telling you the quantity of pixels being shown on the monitor - 800 horizontally and 600 vertically. Like standard definition television sets, these were also known as 4:3 monitors, because the proportion of the dimensions (known as an "aspect ratio") was 4 units across to 3 units in height.

The most common resolution on most monitors in the year 2020 is 1920x1080; also called "high definition" or "HD" monitors. Once again, these have 1,920 horizontal pixels and 1,080 vertical pixels. They are also known as 16:9 monitors because the aspect ratio is 16 units across to 9 units high.
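
As a small illustration of where labels like 4:3 and 16:9 come from, here is a minimal Python sketch that reduces a pixel resolution to its simplest ratio. The function name `aspect_ratio` is made up for this example; it is not part of any particular graphics library.

```python
from math import gcd

def aspect_ratio(width, height):
    """Reduce a pixel resolution to its simplest width:height ratio."""
    divisor = gcd(width, height)  # greatest common divisor of both sides
    return width // divisor, height // divisor

print(aspect_ratio(800, 600))    # (4, 3)
print(aspect_ratio(1920, 1080))  # (16, 9)
```

Dividing both numbers by their greatest common divisor is all it takes, which is why very different resolutions can share the same aspect ratio.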

4K monitors have quadruple the pixels of HD: 3840x2160 pixels, which is double the width and double the height.
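
To see where "quadruple" comes from, here is a quick arithmetic sketch in Python. The helper name `pixel_count` is just for illustration.

```python
def pixel_count(width, height):
    """Total number of pixels in a width x height grid."""
    return width * height

hd = pixel_count(1920, 1080)        # 2,073,600 pixels
four_k = pixel_count(3840, 2160)    # 8,294,400 pixels

print(four_k / hd)  # 4.0
```

Doubling both the width and the height gives four times as many pixels in total.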

All starting to make sense? When you think of monitor resolutions and their pixels like the dots of pointillist paintings, you start to comprehend why 1920x1080 HD screens are clearer than 800x600 monitors and why 4K images are sharper than HD.

Now that you understand what pixels and resolution are, let's look at the final topic, one which always confuses people: Scaling. Altering the size/scale of images is where people start to get lost when it comes to computer graphics.

Here is an example:

"The pixels are defining the length and width. The DPI defines the clarity of the image. Typically, digital is 72 dpi. However, dpi can also make the file size smaller or larger. For icons like this they can typically have a lower dpi to make the file size in kilobytes the right size for the software."

This is an actual quote I recently had from a client and 60% of the sentences are totally wrong. Only the first and third sentences are true. The other three are totally false.

DPI does not define the clarity of the image. DPI is simply a measure of how many of the available pixels fit into one inch.

DPI does not make a file size smaller or larger. Again, DPI is simply a measure of how many of the available pixels fit into one inch.

And that last sentence, "For icons like this they can typically have a lower dpi to make the file size in kilobytes the right size for the software."... I can't even comment on that because it makes no sense. They think the file size of an icon has to fall in a certain range for certain software? There's no such thing! This was written by an alleged graphic designer - someone who should be an expert in this topic. But, even graphic designers can be very poorly educated when it comes to understanding how graphics actually work.
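
Here is a rough Python sketch of why DPI has nothing to do with file size. It assumes an uncompressed image with 3 bytes per pixel (24-bit color) and a hypothetical 64x64 icon; both are assumptions for the example, and compressed formats like PNG or JPEG will produce different byte counts. The point is only that the DPI setting never appears in the size calculation.

```python
def raw_size_bytes(width_px, height_px, bytes_per_pixel=3):
    """Uncompressed size of the pixel data. Note that DPI is not an input."""
    return width_px * height_px * bytes_per_pixel

def printed_size_inches(width_px, height_px, dpi):
    """Physical size the same pixels would cover at a given DPI setting."""
    return width_px / dpi, height_px / dpi

icon = (64, 64)  # hypothetical icon dimensions in pixels
for dpi in (72, 300):
    # The byte count stays the same; only the physical size changes.
    print(dpi, raw_size_bytes(*icon), printed_size_inches(*icon, dpi))
```

Changing the DPI from 72 to 300 leaves the pixel data at 12,288 bytes. The only thing that changes is how many inches those same pixels would be spread across.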

Let's get back to scaling.

As you blow images up to larger sizes or shrink them down to smaller sizes, people never really grasp what is happening. And because we are usually talking about virtual sizes, in some electronic format, it's understandable for people to be a little confused. So, let's take this one step at a time.

Again, let's start with our painting metaphor.

In the physical world, if you are standing in a museum, in front of a pointillist painting, you alter the scale of that image by stepping closer to it, or stepping away from it. You zoom in closer to the pixels or you zoom out.

You can do the same thing on a computer. You can zoom in closer or zoom out further from an image. You can do this numerous different ways. You can zoom on your monitor settings. You can zoom on a web browser. You can zoom in a graphics program. No matter how you are zooming in on an image, you are not altering the actual file. You are simply altering your relative virtual distance from it. This is why most applications use a magnifying glass to represent the zoom function. Just like holding a magnifying glass over a painting, you're not altering the painting, you're just altering your view of it.

You can also scale or resize the actual file itself in a graphics program. This process of making an image larger or smaller is called "interpolation". A program like Photoshop or GIMP will let you scale an image file up or down.

Below we see 3 versions of a progressively smaller image. The webpage is setting all 3 images to be 200 pixels wide. But, if you open them in new tabs, you will see they are 50, 100 and 200 pixels wide respectively. The browser is interpolating them up to 200 pixels wide, so the quality of each image looks very different.

Let's pretend you have an image 216 pixels high and 216 pixels wide. A typical monitor needs about 72dpi to display a clear and crisp image. So, if you have a 216x216 pixel image, you can show that on a monitor at about 3" across. But, let's suppose you wanted to fill the entire background of a full 1920x1080 monitor. You can scale that 216 pixel image up to 1080x1080 but the computer will have to guess how to increase the necessary quantity of pixels. This "guesswork" is known as "interpolation" and it's why small images look blurry or "fuzzy" when you blow them up to a larger size.
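
To make that "guesswork" concrete, here is a minimal sketch of linear interpolation on a single row of brightness values. Real graphics programs work in two dimensions and often use fancier methods, so treat this as a simplified illustration; the function name `upscale_row` is made up for the example.

```python
def upscale_row(row, factor):
    """Stretch a row of pixel values by linearly interpolating between neighbors."""
    new_length = (len(row) - 1) * factor + 1
    result = []
    for i in range(new_length):
        position = i / factor                 # where this new pixel falls in the old row
        left = int(position)                  # nearest original pixel to the left
        right = min(left + 1, len(row) - 1)   # nearest original pixel to the right
        t = position - left                   # how far between the two we are
        result.append(round(row[left] * (1 - t) + row[right] * t))
    return result

# A hard edge from black (0) to white (255)...
print(upscale_row([0, 0, 255, 255], 2))
# [0, 0, 0, 128, 255, 255, 255]
```

Notice the 128 in the middle of the output. That gray pixel never existed in the original row. The computer invented it as a guess halfway between black and white, and that invented in-between value is exactly what makes a sharp edge look soft when an image is blown up.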

Now, suppose you wanted to go the other way.

Suppose you had a very large photograph and you wanted to shrink it down to use it on a webpage. This always looks really good, because computers are great at removing data to interpolate an image down to a smaller size. They don't have to use complex mathematical algorithms to guess and fabricate imaginary pixels. They simply remove pixels which exist.
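
Here is a small sketch of shrinking a row of pixels in Python, shown two ways. The first simply drops pixels, as described above. The second averages neighboring pixels, which is what many image programs do in practice to get a smoother result. Both function names are hypothetical, and a real program would do this in two dimensions.

```python
def drop_pixels(row, factor):
    """Keep every `factor`-th pixel and throw the rest away."""
    return row[::factor]

def average_blocks(row, factor):
    """Replace each group of `factor` pixels with their average value."""
    return [
        round(sum(row[i:i + factor]) / factor)
        for i in range(0, len(row) - factor + 1, factor)
    ]

row = [10, 20, 30, 40, 50, 60]
print(drop_pixels(row, 2))     # [10, 30, 50]
print(average_blocks(row, 2))  # [15, 35, 55]
```

Either way, every output value comes from pixels which really exist in the original. Nothing has to be invented, which is why downscaling holds up so well.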

Here we see 3 versions of a progressively larger image. The webpage is setting all 3 images to be 200 pixels wide. But, if you open them in new tabs, you will see they are 200, 400 and 800 pixels wide respectively. The browser is interpolating them down to 200 pixels wide, so the quality of each image looks very similar.

So, remember that. Interpolating large images down into small images works great. Interpolating small images up into large images works terribly.

The native resolution of a computer monitor is usually 72dpi while the optimal resolution of a printed image is usually 300dpi.

So, for example, if you want to print a 4" x 6" photograph, it should be about 1200x1800 pixels in size; that's 300 times 4 and 300 times 6. If you have an image five times that size, let's say 6000x9000 pixels, it's not going to look any better as a 4x6, because the printer is only producing 300dpi. So, all those extra pixels are useless. But, if you wanted a 20" x 30" photo, that 6000x9000 image would be ideal. If you tried to blow up the 1200x1800 image into 20x30, it would look blurry and fuzzy, because it wouldn't have enough pixels to produce a clear image.
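
The print math above can be sketched in a few lines of Python. The function names are made up for this example, and 300dpi is used as the default because that is the print resolution discussed here.

```python
def pixels_needed(width_in, height_in, dpi=300):
    """Pixel dimensions required to print at a given size and DPI."""
    return round(width_in * dpi), round(height_in * dpi)

def effective_dpi(width_px, width_in):
    """How many pixels per inch you actually get when printing at a given width."""
    return width_px / width_in

print(pixels_needed(4, 6))      # (1200, 1800)
print(pixels_needed(20, 30))    # (6000, 9000)
print(effective_dpi(1200, 20))  # 60.0
```

The last line shows the problem with blowing up the small file. Stretching 1200 pixels across 20 inches leaves only 60 pixels per inch, far short of the 300 the printer can use.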

Now you understand why you need large image files to print large photographs at a good quality, and why you still need a decent resolution for website images as well. You can't take a 480x270 pixel image and make it look nice as a background on a 1920x1080 monitor, because it will be blown up to 4 times the original width and height and the upscaling interpolation will make it look blurry. But if you had a big 5760x3240 image and you scaled it down to 1920x1080, it would look great on a monitor, because downscaling interpolation works really well.

Thank you so much for taking the time to check out my little lesson on computer graphics. These are basic design and graphics concepts I have been wanting to teach and explain for many years, so it's been a lot of fun to finally create a little dissertation on this subject.

And remember, kids, the world owes you nothing... until you create things of value.

Glossary

aspect ratio

The proportions of the dimensions of an image, conveying the relation of width-to-height, expressed in two numbers separated by a colon. For example, a square has a 1:1 aspect ratio, while a horizontal rectangle which is 3 times as wide as it is tall would be a 3:1 aspect ratio. This ratio can be any linear measurement; feet, inches, meters, centimeters, anything. So, for example, a 16:9 television which is 32" wide is going to be 18" tall.

computer graphics

Any visuals displayed on a computer screen or monitor. Typically used only to describe images and pictures.

DPI/PPI

DPI is an abbreviation for "Dots Per Inch" while PPI is an abbreviation for "Pixels Per Inch". These units of measurement tell how many pixels in an image will equate to one inch of space in the real world; either on a monitor (typically 72dpi) or on paper (typically 300dpi).

4K

Computer monitors and televisions with a resolution of 3840x2160 pixels.

HD

HD is an abbreviation for "High Definition". Computer monitors and televisions with a resolution of 1920x1080 pixels.

interpolation

The process a computer uses to mathematically scale images into larger and smaller sizes.

pixels

The electronic "dots" used to generate an image. Note screens and monitors have physical pixels and virtual pixels. Image files also contain pixels. So, the word "pixels" can apply to 3 different things. 90% of the time, people use "pixels" to refer to the pixels in an image. 9.99% of the time, they are talking about the virtual monitor resolution. 0.01% of the time they are talking about physical pixels in a monitor; in other words, it's not really discussed ever, unless maybe you're an engineer who designs monitors.

resolution

The quantity of pixels in an image. Also the quantity of pixels being displayed on a computer monitor.